An Approach to a Unified DDI Environment

Preamble

Although this technical write-up on why a specific DDI (DNS, DHCP and IP Address Management) solution was chosen is a couple of years old, we are seeing network refreshes starting to occur, and administrators are once again grappling with mixed Linux and Windows environments for managing DNS and DHCP.

Of course, the PlexNet approach is always to do what is within budget and causes the least disruption to the environment. Some organisations just aren’t ready for, or capable of, a complete swap-out of technologies; conversely, others are experiencing so many issues with their hybrid environments (or lack of any management system) that they are looking for a turnkey solution.

From a simple overlay for Linux DNS and Microsoft DHCP to a complete refresh, the McGill University case below is a pertinent example of what people are facing today.

The case study below was written by Chung Yu (chung.yu2@mcgill.ca) and was originally published on Monday, March 27, 2017.

Key Takeaways

- McGill’s IT project went beyond technology updates to a complete architectural redesign to create a network that will serve the university well into the future.

- Working with a vendor and its support team, the McGill team reorganized the IP address scheme and improved and centralized DNS and DHCP services, while still letting faculties retain some control over their subnets.

- Although not without challenges, the infrastructure transformation succeeded and brought with it many lessons learned that can help other universities embarking on a similar journey.

To reimagine its approach to technology and achieve greater operational efficiency, McGill University embarked on a complete refresh of its IT infrastructure, which will include purchasing the latest access switch, router, and firewall technology. Beyond simply changing old hardware for new hardware, the Network and Communications Services (NCS) team completely redesigned the system’s architecture to create a state-of-the-art network that will serve the university for many years to come.

McGill University has two main campuses and many network connections to research centers, hospitals, and other academic institutions. With students, staff, faculty, and partners, the university’s total population is nearly 50,000 users. The existing infrastructure was implemented over 10 years ago. While this network is serving the community, our equipment is coming to the end of its service life, and we will upgrade to a much faster network topology.

Glossary

- DNS: Domain Name System

- DHCP: Dynamic Host Configuration Protocol

- IPAM: Internet Protocol Address Management

- DDI: DNS/DHCP/IPAM

- RPZ: Response Policy Zone

- IPv4: Internet Protocol version 4

- IPv6: Internet Protocol version 6

- DNSSEC: Domain Name System Security Extensions

- API: Application Programming Interface

- IoT: Internet of Things

- BYOD: Bring Your Own Device

IP Space Planning and Restructuring

The McGill infrastructure combines both large public IP space and private IP space. The NCS team manages three full public Class B spaces and a handful of public Class C IP spaces, as well as the RFC 1918 private IP space for the university and its affiliates.

Over many years, the university’s IPv4 address space was distributed somewhat haphazardly; given the vast number of available addresses, no real planning occurred early on, and there was little understanding of the problems that would result from mismanaged IP space. As time went on, it became evident that network growth requires much more management. It also became clear that, by revisiting how IP address space was structured, we could become much more efficient in managing the network’s IP access control list (ACL), thereby improving the routing architecture and increasing our security posture. Rolling out a new network that fully supports IPv6 is also a part of our plan.
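The link between address planning and ACL size can be illustrated with a minimal sketch using Python's standard `ipaddress` module. The subnets below are hypothetical and are not McGill's actual allocations; the point is only that contiguous, aligned allocations collapse into a single prefix, while scattered ones each need their own ACL entry.

```python
import ipaddress

def summarize(subnets):
    """Collapse a list of CIDR strings into the fewest covering prefixes."""
    nets = [ipaddress.ip_network(s) for s in subnets]
    return [str(n) for n in ipaddress.collapse_addresses(nets)]

# Hypothetical allocations for one faculty (RFC 1918 space, illustrative only).
scattered = ["10.1.4.0/24", "10.7.9.0/24", "10.20.1.0/24", "10.33.8.0/24"]
planned = ["10.8.0.0/24", "10.8.1.0/24", "10.8.2.0/24", "10.8.3.0/24"]

print(summarize(scattered))  # stays as four separate prefixes
print(summarize(planned))    # collapses to ['10.8.0.0/22']
```

With the planned layout, one ACL line or one route covers the whole faculty; with the scattered layout, every subnet has to be listed and maintained individually.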

Project Challenges

McGill’s previous administration had many separate IT units to support its widely varying faculties. Several years ago, NCS was organized to consolidate IT services and improve efficiency. However, many faculties have retained LAN administrative units while still relying on the NCS team for network and server resources. As a result, service duplication has been a problem, including in the Domain Name System (DNS) and Dynamic Host Configuration Protocol (DHCP). Further, none of the LAN units had any type of IP management system beyond an Excel spreadsheet — and often, these were outdated or had the wrong information.

Our NCS team wanted to reorganize the university’s IP addressing scheme while also improving DNS and DHCP services. Goals included consolidating DNS and DHCP to centralized servers, while letting faculties retain some control on the subnets they manage.

Solution: Unified DDI

Although McGill is a university, it often behaves more like a small ISP. We have a huge amount of IP space; a wide variety of both commercial and consumer-grade network services; and a wide variety of customers, including faculty, academics, resident and off-campus students, hospital staff, library staff, and researchers. We needed to partner with a vendor that could provide a high-performing system and still meet our target budgets. Also, as a public institution, we have very stringent purchasing rules.

Our goal was to find a unified DDI solution — that is, DNS, DHCP, and IP Address Management (IPAM) — to fulfill the following requirements:

- Ensure IPAM for IPv4 and IPv6

- Improve DHCP services with security and auditing features

- Improve DNS architecture (internal/external and cache layer)

- Improve DNS firewall features

- Ensure that Domain Name System Security Extensions (DNSSEC) is easy to manage

- Delegate rights and privileges granularly, based on Active Directory groups

- Audit and log user activity

- Allow historical tracking of IP information

- Provide network discovery and management tools

Armed with a list of critical features, we narrowed our search to the leading DDI providers based on Gartner reviews. Infoblox, BlueCat, and EfficientIP met our essential requirements. To further evaluate their products beyond our required features, we conducted a proof-of-concept (PoC) with each to see not only how well they performed but also to evaluate the ease of managing their systems, their integration with current DHCP and DNS, network discovery and management, and several other DDI functionalities. All three vendors performed well on basic DDI functions. However, EfficientIP’s management interface better tied together the different systems, and its global search and filtering features performed exceptionally well.

Implementation and Training

Once we solidified the deal, our team began network design discussions with EfficientIP. We also opted to work with their Professional Services (PS) team, as migrating the DNS and DHCP to their platform was too big for our team to handle without support. The network plan had several critical project milestones; the new DDI solution was a key element that had to be implemented as soon as possible for several reasons: to

- offer a “soak period” for all NCS admins to get comfortable using the new systems;

- inform the faculty LAN admin units of the new product and give them controlled access;

- integrate with our current tools and management scripts;

- clean up the current IP space, including bad DNS entries, unused IP addresses, and old static entries;

- reallocate private IP space for the IPv4 network; and

- plan and allocate IPv4 to map with future IPv6 network divisions.
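One possible way to make IPv4 subnets "map" onto future IPv6 divisions is to derive each IPv6 /64 from the IPv4 subnet it will sit alongside. The sketch below is an assumption for illustration, not the scheme McGill adopted: it takes a private IPv4 /24, uses its second and third octets as the 16-bit subnet ID, and places it under a /48 site prefix (the documentation prefix `2001:db8::/48` stands in for a real allocation).

```python
import ipaddress

def v6_for_v4(v4_subnet, site_prefix="2001:db8::/48"):
    """Derive a /64 from an IPv4 /24 by reusing octets 2 and 3 as the subnet ID."""
    v4 = ipaddress.ip_network(v4_subnet)
    site = ipaddress.ip_network(site_prefix)
    if v4.version != 4 or v4.prefixlen != 24:
        raise ValueError("sketch only handles IPv4 /24 subnets")
    if site.version != 6 or site.prefixlen != 48:
        raise ValueError("sketch expects an IPv6 /48 site prefix")
    octets = v4.network_address.packed
    # Second and third octets become the 16 bits between the /48 and the /64.
    subnet_id = (octets[1] << 8) | octets[2]
    base = int(site.network_address) | (subnet_id << 64)
    return ipaddress.IPv6Network((base, 64))

print(v6_for_v4("10.8.3.0/24"))  # 2001:db8:0:803::/64
```

A mapping like this keeps the two address plans aligned, so an administrator who knows the IPv4 subnet can read off the matching IPv6 subnet. It only works as written when all IPv4 subnets share the same first octet; a campus with several public and private blocks would need a richer rule.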

As part of the transition from a traditional BIND and Microsoft DNS and DHCP environment to a unified DDI system, we asked EfficientIP to provide a basic training course for NCS admins. Although this course offered a foundation for working with the new system, it was not in-depth enough; amalgamating so many services on a single platform required more training than just the basics.

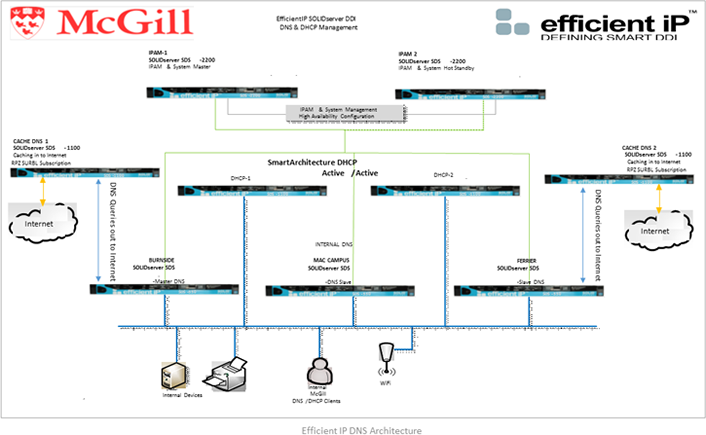

However, the McGill team picked up considerable information and skills while working with the PS team, and the presales support engineering team also helped us during the transition period. Further, following the classroom training, the company often supported us through GoToMeeting sessions, which were invaluable in helping us learn how to manage and troubleshoot the new system. Figure 1 shows the new DNS system’s architecture.

Implementation Issues

Multiple issues challenged smooth implementation of the new system, as follows.

DNS Issues

Our original DNS infrastructure was based on BIND DNS servers. The university historically had a large public IP space, and DNS security was not part of the original design. However, as threats from the Internet grew and we continued our transition from public to private IP space, it became clear that our DNS design was outdated.

To address the issue, the EfficientIP presales team proposed a multi-tiered DNS architecture: internal DNS, external DNS, RPZ DNS, and DNS cache servers. When we implemented this design, we found it overly complex to manage, and its performance was poor. We reworked the design after implementation with the EfficientIP Professional Services team, who simplified it and improved the performance of the DNS services.

DHCP Issues

Converting Microsoft Lync DHCP option fields from Microsoft DHCP to the Internet Systems Consortium (ISC) DHCP proved challenging. Although the vendor had configured the setting for previous clients, we hit a problem with certificate renewals. This issue was not apparent during the initial PoC; it only became apparent later, during our initial rollout.

Resolving this issue required several support calls and internal testing and troubleshooting to determine the failure’s root cause. Ultimately, we could not resolve this internally using the available vendor documentation, so the PS team stepped in and solved the problem by correcting the configuration.

We also had some (not unexpected) confusion with the DHCP leases during the initial cut-over. The PS team recommended lowering all the DHCP lease times gradually prior to cut-over. This would set all Microsoft DHCP leases to expire at a specific hour on the cut-over date to avoid IP address conflicts between old Microsoft addresses and the new ones issued by EfficientIP.
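The idea of stepping lease times down before a cut-over can be sketched as a small scheduling calculation. This is an illustration under stated assumptions, not the procedure the PS team used: it assumes each shorter lease must be in force for one full previous lease period before the next reduction (the conservative case where a client only picks up the new value when its old lease runs out), and the halving factor, dates, and lease lengths are all hypothetical.

```python
from datetime import datetime, timedelta

def lease_step_down(cutover, current, target, factor=2):
    """Return [(apply_at, new_lease)] so that only `target` leases remain at cut-over."""
    if factor <= 1 or target <= timedelta(0):
        raise ValueError("factor must exceed 1 and target must be positive")
    # Forward pass: list each reduction as (lease before, lease after).
    steps = []
    lease = current
    while lease > target:
        shorter = max(lease / factor, target)
        steps.append((lease, shorter))
        lease = shorter
    # Backward pass: each reduction needs one full previous lease period
    # to reach every client before the next change (or the cut-over).
    schedule = []
    deadline = cutover
    for previous, shorter in reversed(steps):
        apply_at = deadline - previous
        schedule.append((apply_at, shorter))
        deadline = apply_at
    schedule.reverse()
    return schedule

# Hypothetical cut-over: 8-day leases reduced to 1 hour.
for when, lease in lease_step_down(datetime(2017, 1, 10, 6, 0),
                                   timedelta(days=8), timedelta(hours=1)):
    print(when.isoformat(), lease)
```

Working backward from the cut-over time shows how early the first reduction has to happen; with long original leases, the lead time is dominated by that first step.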

Finally, when we looked at the EfficientIP lease tables, many of our wireless access points (APs) did not appear in DHCP; this issue was resolved by rebooting every wireless AP.

IPAM Issues

Our initial design had DNS services running on the IPAM servers, which acted as the DNS Master and supported the DNS firewall as a choke point for DNS traffic. However, this design led to severe performance issues for IPAM management services. While user traffic and services were not affected, on the management side the problem was very noticeable. To resolve this performance issue, we had to redesign the DNS architecture, moving the DNS firewall to another layer and removing the DNS service from the IPAM servers.

NetChange Issues

McGill University’s very large network consists of thousands of access switches, routers, and firewalls. Early on in the NetChange network management system (NMS) rollout, we noticed two key issues that did not appear during the PoC.

First, polling all the network devices took a toll on IPAM service performance; to improve it, we divided the devices into classes and polled the classes at separate intervals. Second, the NetChange NMS occasionally associated IP addresses with Internet hostnames that were not part of the McGill network. This issue has been opened with the vendor development team.
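Polling device classes at separate intervals can be pictured as a simple schedule. The sketch below is generic and does not reflect how NetChange is configured; the class names, intervals, and offsets are hypothetical. Each class has an interval in minutes and an offset, so that classes do not all start polling at the same moment.

```python
# Hypothetical classes: name -> (interval in minutes, start offset in minutes).
POLL_PLAN = {
    "core-routers": (5, 0),
    "firewalls": (15, 2),
    "access-switches": (60, 7),
}

def classes_due(minute, plan=POLL_PLAN):
    """Return the device classes whose poll falls on the given minute."""
    return sorted(
        name
        for name, (every, offset) in plan.items()
        if minute >= offset and (minute - offset) % every == 0
    )

for minute in (5, 7, 17):
    print(minute, classes_due(minute))
```

Polling the large class of access switches rarely and the small class of core routers often spreads the load on the IPAM service instead of concentrating it in one sweep.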

DDI Performance

The project met our expectations. We had bumps on the road to migration, but we worked with the vendor’s team to solve all the issues. As we expand our use of the system and better understand the integrated platform at our disposal, we are quickly improving our ability to support our ever-growing and changing network.

A primary goal of moving to a centralized DDI platform was to quickly clean up stale IP information and find gaps in the existing IP management spreadsheets. Moving to the IPAM is helping the NCS team reclaim many IP subnets that were provisioned but never used. Many IP management tasks that previously required multiple service desk tickets (IP/DHCP and DNS) now require a single change request. As we further develop our DDI system, we will give system administrators greater access to our service desk to make controlled changes and alleviate the workload.
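Reclaiming subnets that were provisioned but never used comes down to comparing what was allocated with what is actually seen on the network. A minimal sketch follows, assuming the allocated subnets and the observed addresses (for example from DHCP leases or network discovery) are available as plain lists; the sample values are hypothetical.

```python
import ipaddress

def unused_subnets(allocated, active_addresses):
    """Return allocated subnets that contain none of the observed addresses."""
    nets = [ipaddress.ip_network(s) for s in allocated]
    seen = [ipaddress.ip_address(a) for a in active_addresses]
    return [str(n) for n in nets if not any(a in n for a in seen)]

# Hypothetical inventory and observations.
allocated = ["10.8.0.0/24", "10.8.1.0/24", "10.8.2.0/24"]
active = ["10.8.0.15", "10.8.2.200"]

print(unused_subnets(allocated, active))  # ['10.8.1.0/24']
```

A subnet with no observed hosts is only a candidate for reclamation; it still needs a check with its owner before it is returned to the pool.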

The great differentiator between our vendor and its competitors is search capabilities. Using EfficientIP search, we can quickly locate information and resolve IP support trouble tickets. The reporting capabilities are a great improvement over our original BIND and Windows servers. We now have a much better view of internal and external DNS and DHCP traffic. This supports our strategy plans for both our wired and wireless networks.

The security modules and the appliances’ onboard firewalls support information security initiatives to enhance network security, while the blacklist feeds and custom DNS response policy zones (RPZ) provide a better security posture for critical infrastructure services.
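As a rough illustration of what a custom response policy zone contains, the sketch below turns a blacklist of domain names into RPZ records. It uses the common BIND-style convention in which `CNAME .` makes the resolver answer NXDOMAIN for a name; how EfficientIP stores or imports such zones is not described in the article, and the domain names are placeholders.

```python
def rpz_records(domains):
    """Build NXDOMAIN policy records for each domain and its subdomains."""
    cleaned = {d.strip().lower().rstrip(".") for d in domains if d.strip()}
    lines = []
    for name in sorted(cleaned):
        lines.append(f"{name} CNAME .")    # block the name itself
        lines.append(f"*.{name} CNAME .")  # block everything beneath it
    return lines

# Placeholder blacklist entries, with duplicates and stray formatting.
feed = ["Bad.Example.", "bad.example", " malware.test "]
for line in rpz_records(feed):
    print(line)
```

Normalizing and de-duplicating the feed before generating records keeps the policy zone small and avoids conflicting entries for the same name.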

Gaps in Technical Support

One of the main issues we have faced since transitioning to the new DDI system is technical support for trouble tickets. The vendor website is quite weak in technical documentation on how to best configure their platform. The single published administrator guide provides basic information on different module configurations, but documentation on features is inadequate for troubleshooting. As a result, we often must open a ticket with the EfficientIP support desk to find answers and resolve issues.

Without documents to help us easily solve problems, we must either open a case or take a “just try it out” approach. At times, we can do the latter safely in our test virtual machine, but at other times we must try out approaches in the live environment.

Also, while the vendor demonstrated various customizable IPAM system features, we need further training to leverage these modules. With other technology, we have simply used the technical support sites or examples of other builds to learn such things.

Lessons Learned

Although we successfully transitioned away from Microsoft DHCP/DNS and BIND, the process of moving to a completely new IP management system was punctuated by the following important lessons:

- Plan the cut-over. Importing various IP address sources was more complicated than it originally seemed. Rather than just moving data from one source (spreadsheets) to another source (database), the process was more involved, but it gave us a good opportunity to examine possible IPAM structures.

- Set up the IP structure for future provisioning. Without good visibility of the IP space, it can easily devolve back into an information mess.

- Plan for considerable post-implementation work. After system implementation, we faced two huge tasks: cleaning up old information and training LAN admins to input the updates.

- Reassign responsibilities. Previously, managing the DHCP and the DNS were separate tasks divided between the network and enterprise applications teams. When we merged the DNS and DHCP, we had to redraw the responsibilities of various units. Also, with a proper IP address management tool, all units are now responsible for maintaining up-to-date IP address information.

- Understand module interactions. Troubleshooting a unified DDI system requires a good understanding of how the three modules interact.

- Don’t underestimate DHCP. Our biggest concern during the transition was moving the BIND DNS to a new platform; in actuality, moving the DHCP services proved more difficult. One problem was that we underestimated our DHCP use. Also, our Microsoft DHCP services held up well and were easy to configure, but moving to ISC DHCP actually caused several issues because of how EfficientIP configures DHCP options. As a result, we spent a lot of time after cut-over troubleshooting DHCP services.

- Evaluate support services before you commit. When considering vendors, it is as important to evaluate their support services as it is to evaluate the product itself. In our case, we did not open any support tickets until after we went into production; only then did we realize that while the support engineers were very well trained, the support team was smaller than with traditional network vendors. Also, while the vendor staff was available for the required 24-hour support, a ticket might be picked up by a support engineer in another time zone. That said, the EfficientIP team resolved all trouble tickets involving a network incident.
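The first lesson, that importing spreadsheet data is more involved than copying it across, can be made concrete with a small pre-import check. This is a sketch under assumptions: the column names `ip` and `hostname` and the sample rows are hypothetical, and a real import would apply many more rules. It flags rows with invalid or duplicate addresses before anything is loaded into the IPAM.

```python
import csv
import io
import ipaddress

def check_rows(csv_text):
    """Split spreadsheet rows into importable entries and flagged problems."""
    valid, problems, seen = [], [], set()
    # Line 1 is the header, so data rows start at line 2.
    for line_no, row in enumerate(csv.DictReader(io.StringIO(csv_text)), start=2):
        raw = (row.get("ip") or "").strip()
        try:
            ip = ipaddress.ip_address(raw)
        except ValueError:
            problems.append((line_no, raw, "not a valid IP address"))
            continue
        if ip in seen:
            problems.append((line_no, raw, "duplicate address"))
            continue
        seen.add(ip)
        valid.append((str(ip), (row.get("hostname") or "").strip()))
    return valid, problems

sample = "ip,hostname\n10.8.0.5,printer1\n10.8.0.300,lab-pc\n10.8.0.5,printer-old\n"
valid, problems = check_rows(sample)
print(valid)
print(problems)
```

Running a check like this on each source before the cut-over turns the cleanup into a reviewable list of problems rather than a surprise after import.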

Recommendations

Once properly implemented, a unified DDI infrastructure has many benefits: it saves time in change requests, provides much better network and namespace visibility, and improves security and service availability to the overall network.

However, when planning for a DDI platform implementation, the team must understand both current usage and forecasts of future growth. We have seen exponential growth in our wireless network, which in turn affects the DHCP servers. With Internet of Things, bring-your-own-device, and conventional enterprise devices, DHCP services are highly critical. With DDI, we can now push all of that data into the DNS, IPAM, and network management systems. However, you may not want all that data. Strategic network planning is therefore important, including the question: Just how much data and how many logs does your organization want to retain?

For larger campuses planning an IPv6 network deployment, the deployment in itself will be a huge task. Plan for your DHCP and DNS servers to handle both IPv4 and IPv6 requests.

A good DDI platform will easily accommodate the technical needs of universities; however, if you have many decentralized LAN admin units, plan for additional training to bring them aboard the centralized system. For us, early communication with various faculties that ran independent DNS and/or DHCP services was very important. Many were willing to relinquish their old DHCP or DNS if they had the same rights in the new environment.

Finally, post-implementation involved much more than simply adding new diagrams and how-to documents. Indeed, it has been an ongoing effort of selling the product to other teams, training them to manage their own subnets, re-establishing roles and responsibilities, and, of course, managing new network documentation.

Future Plans

The DDI platform will be a key asset during the evolution of the McGill network. We fully intend to leverage the API plug-ins and use the DNS RPZ firewalls, DNSSEC, and workflow features. Our immediate plans are to

- apply the REST API plugins to support automation;

- improve DNS security through blacklist feeds, custom RPZ firewall zones, and the adoption of DNSSEC;

- plan out the IPv6 address space;

- redraw the IPv4 network landscape, segmenting the network into additional private IP space and reclaiming unused public IP addresses; and

- delegate work functions to faculty LAN units.

Now and in the future, we are committed to providing all IT units greater security, visibility, and functionality on the network and applications they support and deploy.

Chung Yu is a Senior System Administrator, Network Infrastructure, McGill University.

REFERENCE: https://er.educause.edu/articles/2017/3/mcgill-universitys-network-transformation
